Creating Dreamlike Art with Neural Networks: A Comprehensive Guide

Chapter 1: Introduction to Neural Networks and Art

This guide explores how to produce mesmerizing art using artificial neural networks.

P.S. This banner was designed by me.

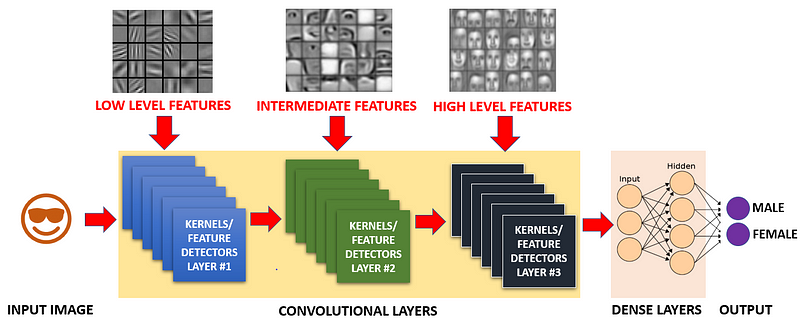

A convolutional neural network (CNN) typically consists of multiple stacked layers. When an image is fed into the network, it passes through these layers in turn, and the output layer produces the final decision. However, several questions arise: How do the layers interact with one another? What does each layer perceive? What kind of information is exchanged between layers?

One way to understand what happens inside a layer is to modify the input image so that it amplifies that layer's output, then look at the result. A well-trained CNN identifies features in the input image progressively: the initial layers may focus on edges, the intermediate layers on shapes, while the final layers combine this information to make a decision.
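To make the "early layers respond to edges" idea concrete, here is a small sketch in plain Python. It is not taken from a trained network: the Sobel kernel, the tiny test image, and the helper name `convolve2d_valid` are illustrative assumptions. It applies one hand-written filter the way a convolutional layer applies a learned one.

```python
# Hand-written vertical-edge filter (Sobel); a trained CNN learns its own filters.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d_valid(image, kernel):
    """Slide the kernel over the image without padding (cross-correlation, as CNN layers do)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                kernel[i][j] * image[row + i][col + j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]


# Dark left half, bright right half: a single vertical edge in the middle.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

response = convolve2d_valid(image, SOBEL_X)
print(response)  # [[0, 4, 4, 0], [0, 4, 4, 0]]
```

The response is zero over the flat regions and non-zero only where the window straddles the edge, which is the kind of signal an early layer passes on to deeper layers.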

But what happens if we repeatedly feed the network its own output?

The result is a continuous flow of images that exhibit a dream-like quality. The concept of "Deep Dream" was introduced by Alexander Mordvintsev. This algorithm iteratively modifies the input image to activate certain neurons within a layer more strongly, resulting in artistic imagery.

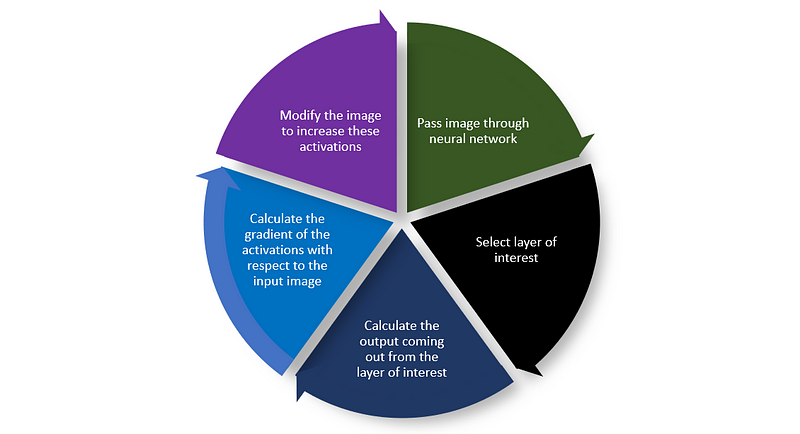

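Generating these surreal effects generally involves three steps, each covered in Chapter 2: loading a pre-trained network and selecting layers, maximizing a loss on those layers with gradient ascent, and iterating over the image.

In this guide, we demonstrate an iterative technique that repeatedly feeds the processed image back into the network to amplify the output of selected layers, combined with zooming and filtering to enhance the effect further.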

Chapter 2: Implementing Deep Dream

This guide uses a pre-trained Inception-ResNet-v2 convolutional neural network to create deep dreams. The network is 164 layers deep and was trained on more than a million images across 1,000 categories.

Load the Model

The first step is to download the model and select the layers of interest. Here, we choose layers at three depths: early, mid-level, and final.
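A minimal sketch of this step, assuming TensorFlow's Keras API (the guide does not name its framework here). The helper `build_dream_model` and the `DREAM_LAYERS` grouping are hypothetical. The layer names `mixed_5b`, `mixed_6a`, and `mixed_7a` do exist in Keras' `InceptionResNetV2`, but using them as the early, mid, and late picks is an assumption and not necessarily the selection used for the figures in this guide.

```python
# Assumed layer choices; any layer name from the model's summary could be used instead.
DREAM_LAYERS = {
    "early": ["mixed_5b"],
    "mid": ["mixed_6a"],
    "late": ["mixed_7a"],
}


def build_dream_model(layer_names):
    """Return a Keras model that maps an image batch to the activations of the named layers."""
    # Third-party dependency, imported here so the layer table above can be
    # inspected without TensorFlow installed.
    import tensorflow as tf

    # include_top=False drops the classifier head, so inputs of varying size are accepted.
    base_model = tf.keras.applications.InceptionResNetV2(
        include_top=False, weights="imagenet"
    )
    outputs = [base_model.get_layer(name).output for name in layer_names]
    return tf.keras.Model(inputs=base_model.input, outputs=outputs)
```

Calling `build_dream_model(DREAM_LAYERS["mid"])` downloads the pre-trained weights on first use. Passing a different group of names switches the depth at which patterns are amplified.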

Maximizing Loss

In standard neural network training, the objective is to minimize the loss through gradient descent on the network's weights. In the Deep Dream algorithm, by contrast, we maximize a loss, here how strongly the selected layers activate, through gradient ascent on the input image, and the modified image is fed back into the network. This feedback loop results in an over-interpretation of the image, akin to a child's imaginative interpretation of cloud shapes.

The first step in the gradient ascent process is to calculate the loss and compute its gradient with respect to the image.

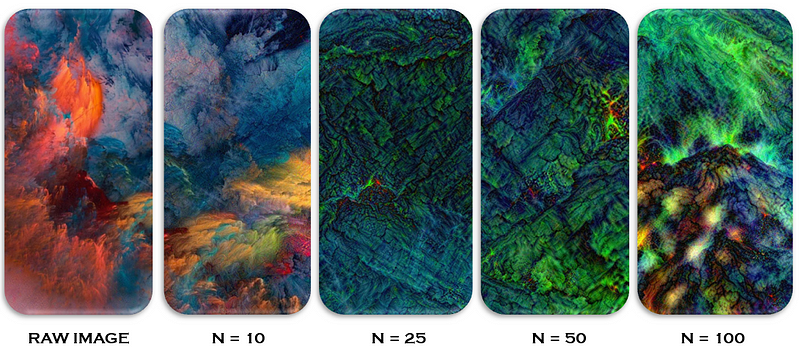

The next step is to update the image by adding the calculated gradient to it. Over many updates, the neural network begins to over-interpret the image, leading to "hallucinations" within the artwork.

Two functions implement this: calc_loss calculates the loss, and deepdream applies the resulting gradient to the input image.
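The loop described above can be illustrated with a toy version in plain Python. This is a sketch and not the original implementation: the "layer" is just three hand-picked filters applied by dot product with a ReLU, the gradient is written out analytically instead of coming from an autodiff library, and the RMS normalization and the [-1, 1] pixel range are assumptions. The names `calc_loss` and `deepdream` are reused only to mirror the two roles.

```python
import math


def _pre_activation(image, weights):
    """Dot product of one filter with the flattened image."""
    return sum(w * p for w, p in zip(weights, image))


def calc_loss(image, filters):
    """Mean ReLU activation of the toy layer; this is the quantity to maximize."""
    activations = [max(0.0, _pre_activation(image, f)) for f in filters]
    return sum(activations) / len(activations)


def calc_gradient(image, filters):
    """Analytic gradient of calc_loss with respect to each pixel."""
    grad = [0.0] * len(image)
    for weights in filters:
        if _pre_activation(image, weights) > 0:  # ReLU passes gradient only when active
            for i, w in enumerate(weights):
                grad[i] += w / len(filters)
    return grad


def deepdream(image, filters, steps=20, step_size=0.01):
    """Gradient ascent: repeatedly nudge the pixels in the direction that raises the loss."""
    image = list(image)
    for _ in range(steps):
        grad = calc_gradient(image, filters)
        # Normalize by the RMS so each step has a comparable size.
        rms = math.sqrt(sum(g * g for g in grad) / len(grad))
        image = [
            max(-1.0, min(1.0, p + step_size * g / (rms + 1e-8)))  # keep pixels in [-1, 1]
            for p, g in zip(image, grad)
        ]
    return image


filters = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [0.5, 0.5, 0.5, 0.5]]
start = [0.1, -0.2, 0.3, 0.0]
dreamed = deepdream(start, filters)
print(calc_loss(start, filters), calc_loss(dreamed, filters))
```

The second printed value is larger than the first: the image has been changed so that the layer responds more strongly, while the filters (the network's weights) were never touched. With a real network, the gradient would come from the framework's automatic differentiation instead of `calc_gradient`.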

Iterate

After defining the loss-maximizing functions, we call them within a loop to process the image repeatedly.
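The outer loop, including the zooming mentioned earlier, might look like the sketch below. It is written in plain Python on a nested-list image and is not the original code: `zoom_center` and `dream_loop` are hypothetical helpers, the nearest-neighbour resize and the default zoom factor of 1.05 are assumptions, and `dream_step` stands in for whatever function performs one gradient-ascent pass.

```python
def zoom_center(image, factor=1.05):
    """Crop the central 1/factor of a 2-D grid and stretch it back to the original size."""
    height, width = len(image), len(image[0])
    crop_h, crop_w = height / factor, width / factor
    top, left = (height - crop_h) / 2, (width - crop_w) / 2
    return [
        [
            # Nearest-neighbour lookup into the cropped region.
            image[min(height - 1, int(top + (row + 0.5) * crop_h / height))][
                min(width - 1, int(left + (col + 0.5) * crop_w / width))
            ]
            for col in range(width)
        ]
        for row in range(height)
    ]


def dream_loop(image, dream_step, num_frames, zoom_factor=1.05):
    """Alternate one dream pass and one zoom, keeping every frame."""
    frames = []
    for _ in range(num_frames):
        image = dream_step(image)  # amplify what the network "sees"
        frames.append(image)
        image = zoom_center(image, zoom_factor)  # zoom in before the next pass
    return frames


# Tiny check with a do-nothing dream step and an exaggerated 2x zoom.
grid = [[row * 4 + col for col in range(4)] for row in range(4)]
frames = dream_loop(grid, lambda img: img, num_frames=3, zoom_factor=2.0)
print(frames[1])  # [[5, 5, 6, 6], [5, 5, 6, 6], [9, 9, 10, 10], [9, 9, 10, 10]]
```

Because each frame starts from a zoomed copy of the previous one, the saved frames play back as a continuous dive into the image.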

Results

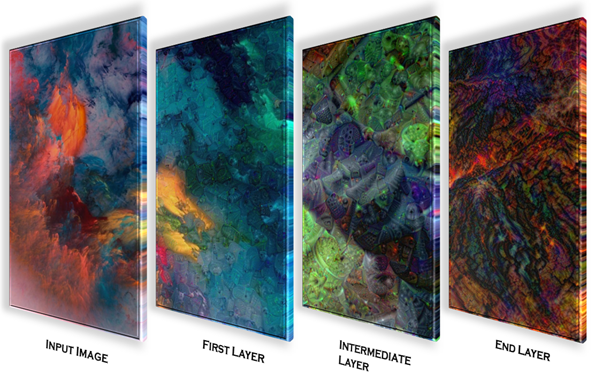

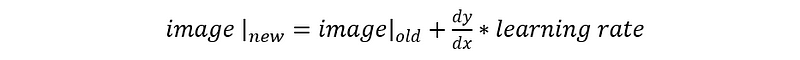

The subsequent figures illustrate how patterns emerge within the image as the number of iterations increases across different layers. Early layers tend to reveal more edges and strokes, as they are sensitive to fundamental features.

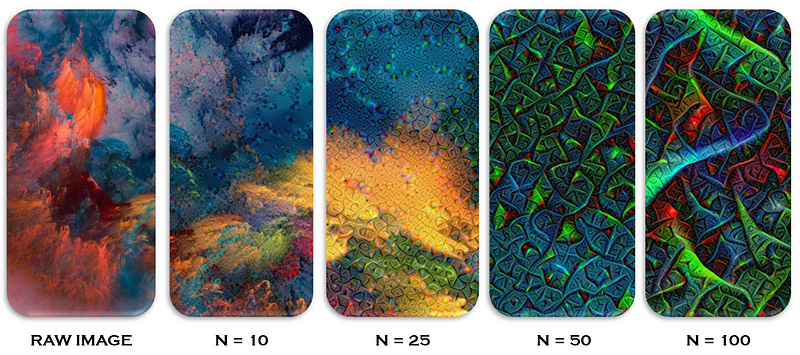

In contrast, intermediate layers yield more intricate structures.

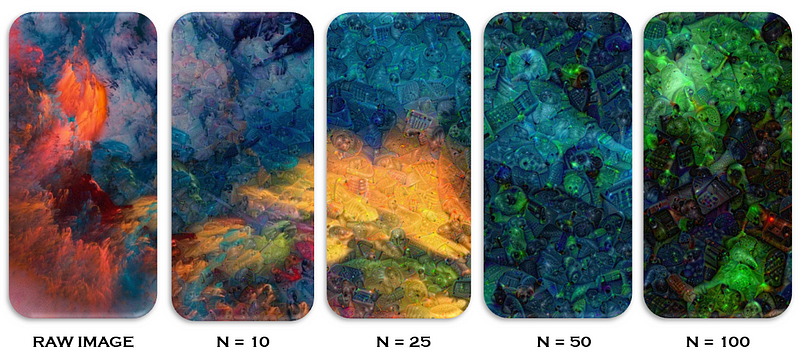

The final layers produce even more elaborate formations.

Creating a Psychedelic Video

After completing 2,000 iterations using the last layers of the network, I compiled the resulting images into a video. Various tools exist for this purpose; I used Python to generate the video at 24 frames per second.
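One possible way to do this in Python is sketched below, assuming the frames were saved as numbered PNG files and that OpenCV (`opencv-python`) is installed. The guide does not say which library was used, so the OpenCV calls, the `mp4v` codec, and the helper names `frame_sort_key` and `frames_to_video` are assumptions.

```python
import re
from pathlib import Path


def frame_sort_key(path):
    """Order frames by the last number in the file name, so frame_2 comes before frame_10."""
    numbers = re.findall(r"\d+", Path(path).stem)
    return int(numbers[-1]) if numbers else -1


def frames_to_video(frame_dir, output_path, fps=24):
    """Write every PNG in frame_dir to a video file and return the number of frames used."""
    # Third-party dependency, imported here so frame_sort_key works without it.
    import cv2

    frame_paths = sorted(Path(frame_dir).glob("*.png"), key=frame_sort_key)
    if not frame_paths:
        raise ValueError(f"no PNG frames found in {frame_dir}")

    first = cv2.imread(str(frame_paths[0]))
    height, width = first.shape[:2]
    writer = cv2.VideoWriter(
        str(output_path), cv2.VideoWriter_fourcc(*"mp4v"), fps, (width, height)
    )
    try:
        for path in frame_paths:
            # All frames are expected to share the size of the first one.
            writer.write(cv2.imread(str(path)))
    finally:
        writer.release()
    return len(frame_paths)
```

Sorting numerically matters because a plain alphabetical sort would play `frame_10.png` before `frame_2.png`. At 24 frames per second, 2,000 frames give a video of roughly 83 seconds.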

The resulting video is available on YouTube; you can check it out here:

Deep Dream is a useful way to see what the different layers of a neural network respond to. In addition, the amplification achieved through gradient ascent produces unique patterns, which makes it a valuable tool for artists.

I hope you found this guide enjoyable. If you have any questions or feel I missed something, please don't hesitate to reach out on LinkedIn or Twitter. For all resources referenced in this article, follow this GitHub link.

Cheers!

Rahul