Understanding Explainable AI: Evaluating Machine Learning Models


Chapter 1: Introduction to Explainable AI

In the realm of artificial intelligence, understanding the reasoning behind the decisions made by machine learning models is crucial. This is where explainable AI (XAI) comes into play.

The Importance of Explainable AI

Consider a self-driving vehicle that misidentifies an object and causes an accident. Who is accountable: the passenger, the software developer, or the manufacturer? Similarly, if a cancer detection system misdiagnoses healthy tissue as malignant, the patient may suffer unnecessary distress. And in mortgage evaluations, knowing why a loan was denied can help applicants address the underlying financial issues. These situations show why AI systems need to move from opaque "black boxes" toward transparent "white boxes" whose decisions can be explained.

Evaluating Machine Learning Models

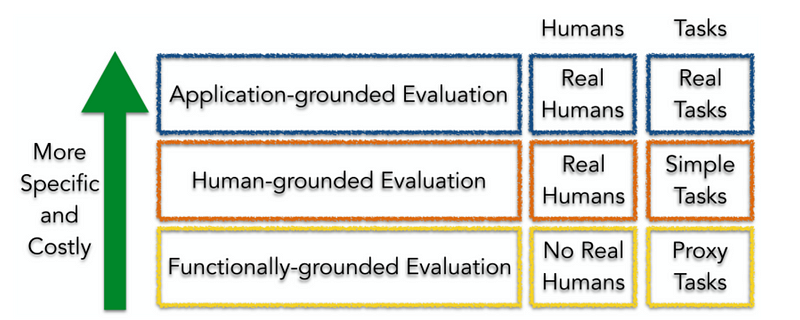

Assessing the performance of machine learning models typically relies on accuracy metrics. Explanations, however, are judged by how well humans understand them, and a single prediction can have several valid explanations rather than one correct answer. Doshi-Velez and Kim propose three distinct evaluation frameworks: application-grounded, human-grounded, and functionally-grounded evaluation.

Application-Grounded Evaluation

This method is straightforward but can be resource-intensive. It involves human experts assessing the outputs of explainable models to determine the quality of the explanations provided. This is particularly effective in high-stakes fields such as medicine, where a doctor may evaluate a model that identifies cancerous cells, assessing whether the provided rationale is both clear and informative.

Human-Grounded Evaluation

Like application-grounded evaluation, this approach relies on human judges, but it uses simpler tasks that lay participants can perform without domain expertise. For instance, with the MNIST handwritten-digit dataset, participants can judge how well an explanation corresponds to the digit the model recognized. This is cheaper and tells developers what makes an explanation effective for people, although it does not necessarily show that the explanations are correct.

Functionally-Grounded Evaluation

In contrast to the previous methods, functionally-grounded evaluation does not involve human input. Instead, it relies on formalized proxy tasks to test explainability, thus requiring fewer resources. The challenge lies in selecting appropriate proxy tasks, but once identified, the evaluation process becomes more straightforward.
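To make the idea of a proxy task concrete, here is a minimal sketch of two proxies that can be computed without any human participants, assuming the explanation takes the form of a linear model (a weight vector plus an intercept). Sparsity counts how many features the explanation uses, and fidelity measures how closely the explanation reproduces the black-box outputs (as R²) on a set of inputs. These particular proxies and the helper names `sparsity` and `fidelity_r2` are illustrative choices, not ones prescribed by Doshi-Velez and Kim.

```python
import numpy as np


def sparsity(weights, tol=1e-8):
    """Proxy 1 (illustrative): number of features the explanation actually uses.

    Fewer non-negligible weights is treated as 'simpler to understand'.
    """
    return int(np.sum(np.abs(weights) > tol))


def fidelity_r2(black_box, weights, intercept, X):
    """Proxy 2 (illustrative): R^2 agreement between a linear explanation and the black box.

    black_box: callable mapping an (n, d) array to n predictions.
    weights, intercept: the linear explanation being evaluated.
    X: (n, d) array of inputs on which agreement is measured.
    """
    y = black_box(X)                      # what the model actually predicts
    y_hat = X @ weights + intercept       # what the explanation claims it predicts
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot          # 1.0 means perfect agreement


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w_true = np.array([1.0, -2.0, 0.0])
    model = lambda X: X @ w_true + 0.5    # hypothetical black box
    X = rng.normal(size=(100, 3))
    print(sparsity(w_true), fidelity_r2(model, w_true, 0.5, X))
```

Both numbers can be computed automatically for many candidate explanations, which is what makes this kind of evaluation cheap; the hard part, as noted above, is arguing that the chosen proxy really tracks human understanding.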

Chapter 2: Exploring Explainable Models

With these evaluation frameworks in mind, this chapter highlights three prominent explanation techniques: LIME, LRP, and DeepLIFT.

The first video, "Stanford Seminar - ML Explainability Part 4: Evaluating Model Interpretations/Explanations," examines the various facets of model evaluation in explainable AI.

The second video, "Model Interpretability and Explainability for Machine Learning Models," discusses the significance of interpretability in machine learning.

Local Interpretable Model-agnostic Explanations (LIME)

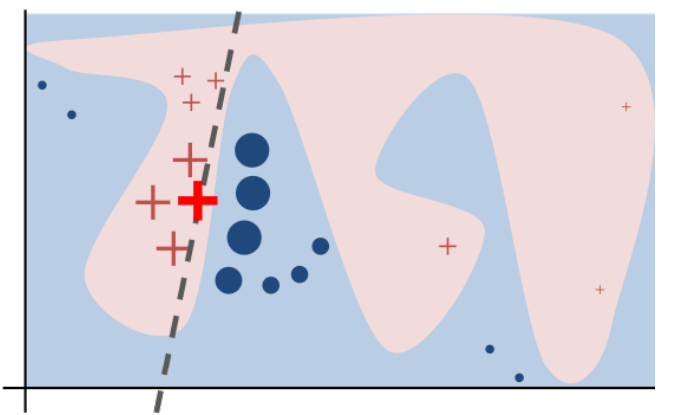

LIME explains individual predictions of any classifier by fitting a simple, interpretable model (such as a sparse linear model) that is faithful to the classifier in the neighborhood of the instance being explained. The following illustration depicts the core concept of LIME:

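The highlighted red sample is explained by sampling nearby points, weighting them by their proximity to it, and fitting the interpretable model to the classifier's predictions on those points; the features with the largest weights in that model are the ones that most influenced the classification. One of LIME's main advantages is that it is model-agnostic: it treats the classifier as a black box, so developers can apply it to any classifier type.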
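The steps behind LIME can be sketched in a few lines of NumPy. This is a simplified, from-scratch illustration for numeric tabular input, not the API of the official `lime` package: it perturbs the instance with Gaussian noise, queries the black-box `predict_fn`, weights the perturbed samples with an exponential kernel on their distance to the instance, and fits a weighted linear model whose coefficients serve as the explanation. The function name `lime_explain` and the defaults for `num_samples`, `kernel_width`, and `scale` are assumptions for illustration; the real package also maps inputs to an interpretable representation (such as superpixels or word presence) and performs feature selection, which this sketch omits.

```python
import numpy as np


def lime_explain(predict_fn, x, num_samples=500, kernel_width=0.75, scale=1.0, seed=0):
    """Minimal LIME-style local surrogate (hypothetical helper, tabular input only).

    predict_fn: black box mapping an (n, d) array to n scores (e.g. class probabilities).
    x: 1-D array, the instance to explain.
    Returns (intercept, per-feature local weights).
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]

    # 1. Perturb: draw samples in the neighborhood of x.
    Z = x + rng.normal(0.0, scale, size=(num_samples, d))

    # 2. Query the black box on the perturbed samples.
    y = predict_fn(Z)

    # 3. Weight samples by proximity to x (exponential kernel on Euclidean distance).
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 4. Fit a weighted least-squares linear model (column of ones = intercept).
    Z_aug = np.hstack([np.ones((num_samples, 1)), Z])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(Z_aug * sw[:, None], y * sw, rcond=None)
    return beta[0], beta[1:]


if __name__ == "__main__":
    # Hypothetical nonlinear black box: a logistic score of two features.
    model = lambda Z: 1.0 / (1.0 + np.exp(-(3.0 * Z[:, 0] - Z[:, 1] ** 2)))
    intercept, weights = lime_explain(model, np.array([0.2, 1.0]))
    print("local feature weights:", weights)
```

For a black box that is exactly linear, the surrogate recovers its coefficients; for a nonlinear model the weights describe its behavior only near `x`, which is what "locally faithful" means.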

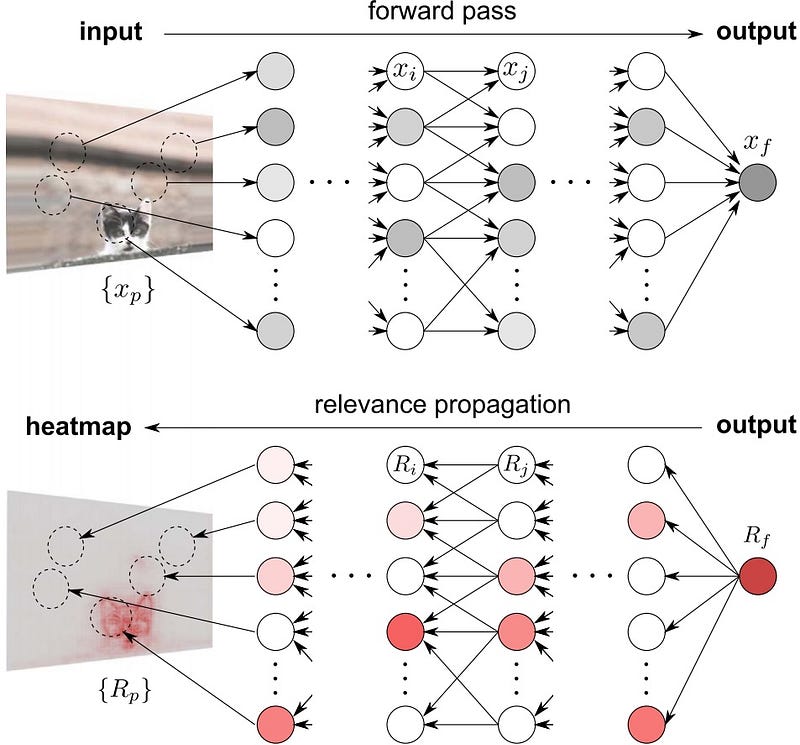

Layer-wise Relevance Propagation (LRP)

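LRP is designed for models with layered architectures, such as deep neural networks. Starting from the output score, it propagates relevance backward through the network layer by layer, redistributing each neuron's relevance among the neurons in the layer below in proportion to their contributions, so that the total relevance is (approximately) conserved.

This produces a saliency map (heatmap) that highlights the input features, such as pixels, that contributed most to the prediction, making the method well suited to tasks like image classification.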
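A minimal sketch of the backward relevance pass, using the LRP-ε rule (one of several propagation rules in the LRP literature) on a small fully connected ReLU network written in NumPy. For a layer with inputs a_j, weights w_jk, and pre-activations z_k, the rule is R_j = Σ_k a_j w_jk / (z_k + ε·sign(z_k)) · R_k, where ε stabilizes the division. The network, its weights, and the function name `lrp_epsilon` are hypothetical; practical implementations support convolutional layers and other rules as well.

```python
import numpy as np


def lrp_epsilon(weights, biases, x, eps=1e-6):
    """LRP-epsilon for a dense network: ReLU hidden layers, linear output layer.

    weights: list of (n_in, n_out) arrays; biases: list of (n_out,) arrays.
    Returns (input relevances, network output).
    """
    # Forward pass, storing the activations entering each layer.
    activations = [x]
    a = x
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b
        a = np.maximum(z, 0.0) if i < len(weights) - 1 else z
        activations.append(a)

    # Start with relevance equal to the output score, then go backward.
    R = activations[-1].copy()
    for W, b, a_in in zip(reversed(weights), reversed(biases), reversed(activations[:-1])):
        z = a_in @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)   # epsilon stabilizer
        s = R / z                                   # relevance per unit of pre-activation
        R = a_in * (W @ s)                          # redistribute to the layer below
    return R, activations[-1]


if __name__ == "__main__":
    # Hypothetical 2-2-1 network with zero biases.
    W1 = np.array([[1.0, -1.0], [0.5, 1.0]])
    W2 = np.array([[1.0], [2.0]])
    R, out = lrp_epsilon([W1, W2], [np.zeros(2), np.zeros(1)], np.array([1.0, 2.0]))
    print("input relevances:", R, "sum:", R.sum(), "output:", out)
```

With zero biases and a small ε, the input relevances sum (approximately) to the output score. In this example the output is 4, and the two inputs receive relevances of about -1 and 5: the first input pushes against the prediction, the second supports it.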

Deep Learning Important Features (DeepLIFT)

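DeepLIFT is tailored to neural networks. It identifies important features by comparing each neuron's activation on the input with its activation on a reference input (for example, an all-zeros input) and attributing the difference in output to the differences in the inputs. Because it propagates these differences rather than local gradients, it can assign nonzero importance even where the gradient is zero, such as at an inactive ReLU.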
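The reference-difference idea can be illustrated with DeepLIFT's Rescale rule on a one-hidden-layer ReLU network. Each ReLU gets a multiplier Δh/Δz (change in its output over change in its input, both measured against the reference) instead of its local gradient; chaining multipliers through the network and multiplying by x − x_ref gives per-feature contributions that sum to f(x) − f(x_ref). The NumPy code, the function name `deeplift_rescale`, and the all-zeros reference are assumptions for illustration; the original method also defines a RevealCancel rule and handles deeper networks.

```python
import numpy as np


def deeplift_rescale(W1, b1, w2, x, x_ref):
    """DeepLIFT Rescale rule for f(x) = relu(x @ W1 + b1) @ w2 (hypothetical helper).

    Returns (per-feature contributions, f(x) - f(x_ref)).
    """
    # Forward pass on both the input and the reference.
    z, z_ref = x @ W1 + b1, x_ref @ W1 + b1
    h, h_ref = np.maximum(z, 0.0), np.maximum(z_ref, 0.0)
    y, y_ref = h @ w2, h_ref @ w2

    # Rescale multiplier for each ReLU: delta-output / delta-input.
    dz, dh = z - z_ref, h - h_ref
    safe = np.abs(dz) > 1e-7
    grad = (z > 0).astype(float)          # fall back to the gradient when dz is ~0
    m_relu = np.where(safe, dh / np.where(safe, dz, 1.0), grad)

    # Chain rule for multipliers back to the inputs, then scale by input differences.
    m_x = W1 @ (m_relu * w2)
    contributions = m_x * (x - x_ref)
    return contributions, y - y_ref


if __name__ == "__main__":
    # One inactive ReLU: at x = 3 the pre-activation is -2, so the gradient is 0,
    # but relative to the all-zeros reference the output dropped from 1 to 0.
    c, delta = deeplift_rescale(np.array([[-1.0]]), np.array([1.0]),
                                np.array([1.0]), np.array([3.0]), np.array([0.0]))
    print("contribution:", c, "f(x) - f(x_ref):", delta)
```

In the example, gradient × input would assign the feature zero importance, while the Rescale rule assigns it -1, exactly accounting for the change in output relative to the reference.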

In conclusion, the evaluation of explainable AI models is critical for their acceptance and integration into various industries. By employing different evaluation frameworks and explainable techniques, stakeholders can ensure that AI systems operate transparently and reliably.