Reimagining My Data Infrastructure: Lessons Learned and Future Steps


Chapter 1: Introduction to Data Infrastructure Overhaul

Have you ever felt daunted by the thought of designing your own data infrastructure? The key is to start small. You can kick off your journey with my complimentary, 5-page guide on data project ideation.

Data Doodling: Conceptualizing System Design on Paper

One of the advantages of creating and managing your own systems is the unparalleled freedom it offers. You have the liberty to determine the logic behind your infrastructure, decide which data to collect, and choose how to present that data to your audience.

However, this freedom comes with a significant downside: a lack of accountability. You notice issues in your foundation and think, "I'll address that later." No manager or stakeholder is going to point out that you are running several similar processes across different virtual machines, or that materialized views could cut your processing time. Ultimately, it's up to you to acknowledge these problems.

When the moment finally arrives for you to tackle these issues, the task can escalate from simply patching up problems to a comprehensive system overhaul. So, what do you do next?

In my own experience, I set up my system rather quickly after months of procrastination. Using some spare time in a peaceful French apartment, I built an analytics pipeline to help extract insights for more impactful writing, which I aimed to share with an audience that would appreciate and benefit from it.

The challenge, however, was the sheer volume of work involved, and making substantial changes now seems even more daunting. With motivation and focus in scarce supply, the last thing I want to do is dive into an Integrated Development Environment (IDE).

To get my thoughts out of my head, I started compiling a wish list on my phone. The list worked as a reminder of tasks, but it lacked the overview that a more formal representation would provide.

I also recognized that using software to create an architecture diagram might make the process seem overly formal, potentially hindering my ability to accurately convey the components involved. Thus, I opted for a simple notepad to sketch a rough yet truthful depiction of my basic data mart, including upstream sources, staging resources, downstream storage, and the dashboards I receive via email.

While I will delve into some specifics of my process, my primary goal is to illustrate the importance of reviewing underlying processes and reconsidering past decisions to develop a more efficient, scalable, and less intimidating system, even if it’s just for a single viewer.

Section 1.1: My Current Data Infrastructure

This particular system, unlike those I’ve constructed in a professional context, relies on a limited number of upstream sources. I don’t connect to large raw data sources like Google Analytics; instead, I utilize APIs from three key platforms: Medium, ConvertKit, and Stripe.

Each source has its own cloud function for data loading. For those interested, I have documented my processes for ConvertKit and Stripe. Stripe is straightforward, making a single API call, which simplifies integration into a new workflow.

ConvertKit, by contrast, uses one API request to feed two reports: a running subscriber count and a table with more detailed attributes. The full process has two steps: ingesting the API data and manually downloading a static JSON file each month. While balancing daily loads with the monthly check isn’t overly complex, it does add an extra task to the final product's Directed Acyclic Graph (DAG).
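To make the "one request, two reports" idea concrete, here is a minimal sketch in Python. The payload shape (a `total_subscribers` field plus a `subscribers` list with `id`, `state`, and `created_at`) is an assumption made for illustration, as are the function and column names; it is not the actual cloud function.

```python
from datetime import date


def split_subscriber_payload(payload: dict, as_of: date) -> tuple[dict, list[dict]]:
    """Turn one API response into a count row and a list of attribute rows.

    The payload keys used here are assumed for illustration.
    """
    subscribers = payload.get("subscribers", [])
    snapshot = as_of.isoformat()

    # Report 1: a single row for the running subscriber count.
    count_row = {
        "snapshot_date": snapshot,
        "subscriber_count": payload.get("total_subscribers", len(subscribers)),
    }

    # Report 2: one row per subscriber with the more detailed attributes.
    attribute_rows = [
        {
            "snapshot_date": snapshot,
            "subscriber_id": sub.get("id"),
            "state": sub.get("state"),
            "created_at": sub.get("created_at"),
        }
        for sub in subscribers
    ]
    return count_row, attribute_rows
```

Both results come from the same response, so the daily load needs only one request; each result can then be appended to its own table.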

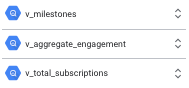

On top of the Medium data, I’ve created several views, though only two are shown here. The primary view that drives my dashboard is called v_story_stats, which aggregates multiple tables. Additionally, I maintain an aggregate_engagement view to generate similar reports on a monthly basis.

My staging area is relatively straightforward. Given that part of my process is inherently manual, I’ll likely continue to depend on a mix of cloud storage and Google Sheets for intermediate data storage. I use Cloud Storage for the aforementioned JSON data and Google Sheets for values that I can't retrieve from my API sources, such as whether a particular story has been boosted.

Data from these upstream and staging sources flows into BigQuery, where it feeds what are currently standard (non-materialized) views. These views power dashboards in Looker and produce the daily reports I skim through in under five minutes while I brew my morning coffee.

Section 1.2: Identifying Process Inefficiencies

My main critique of the current setup is that its pieces run independently of one another. The cloud functions execute on fixed schedules, the views are queried on demand, and the sheets are updated separately, with nothing connecting these processes. For example, there is no upstream check to verify that my base tables have been updated, nor do I have a mechanism to trigger monthly processes based on the current date.
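As a rough illustration of the missing upstream check, the sketch below flags base tables that have not been updated recently. It assumes a last-modified timestamp is available for each table (from table metadata or a load log, for example); the table names and the 24-hour threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_modified: datetime, now: datetime,
             max_age: timedelta = timedelta(hours=24)) -> bool:
    """Return True if the table was updated within max_age of now."""
    return now - last_modified <= max_age


def stale_tables(last_modified_by_table: dict, now: datetime,
                 max_age: timedelta = timedelta(hours=24)) -> list:
    """Return the names of tables whose last update is older than max_age."""
    return sorted(
        name
        for name, modified in last_modified_by_table.items()
        if not is_fresh(modified, now, max_age)
    )


# Hypothetical timestamps for two base tables.
now = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
tables = {
    "medium_story_stats": now - timedelta(hours=2),
    "convertkit_subscribers": now - timedelta(hours=30),
}
print(stale_tables(tables, now))
```

A downstream step could refuse to run, or send an alert, whenever this list is non-empty instead of silently producing an empty report.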

This disjointed setup has led to inevitable errors, resulting in the frustrating “No data available” message in both my BigQuery and Looker environments. To transform this haphazard collection of cloud functions into a cohesive workflow, I need to understand the timing of each process better.

To simplify this, I’m categorizing my processes into two groups:

- Daily: Medium ETL, ConvertKit

- Monthly: Stripe, Medium (historic data)
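The grouping above can be expressed as a small lookup that decides which jobs are due on a given date. This is only a sketch: the job names are made up for illustration, and running monthly jobs on the first of the month is an assumption, not something the current setup defines.

```python
from datetime import date

# Cadence per job, mirroring the daily/monthly grouping above.
SCHEDULE = {
    "medium_etl": "daily",
    "convertkit": "daily",
    "stripe": "monthly",
    "medium_historic": "monthly",
}


def jobs_due(today: date, monthly_run_day: int = 1) -> list:
    """Return the jobs that should run today, in schedule order."""
    due = []
    for job, cadence in SCHEDULE.items():
        is_monthly_day = today.day == monthly_run_day
        if cadence == "daily" or (cadence == "monthly" and is_monthly_day):
            due.append(job)
    return due
```

On most days `jobs_due` returns only the daily jobs; on the chosen day of the month it returns all four, which covers the date-based trigger the current setup lacks.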

Additionally, I need to assess the downstream tables. My monthly dashboard is the main offender: far too many views currently support my Looker reporting. To speed up processing, my ultimate objective is to convert these views into materialized views or static tables.
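One hedged way to picture the "static table" option is a small helper that builds a BigQuery `CREATE OR REPLACE TABLE ... AS SELECT` statement from an existing view. The dataset name `analytics` and the target table name are hypothetical; only v_story_stats comes from the setup described earlier.

```python
def materialize_view_sql(view: str, target_table: str) -> str:
    """Build a statement that snapshots a view into a static table."""
    return (
        f"CREATE OR REPLACE TABLE `{target_table}` AS\n"
        f"SELECT * FROM `{view}`"
    )


# Hypothetical dataset and table names.
print(materialize_view_sql("analytics.v_story_stats", "analytics.story_stats_daily"))
```

Running a statement like this on a schedule means the dashboard reads a precomputed table rather than re-evaluating the view on every load. BigQuery's native materialized views are an alternative, though they come with their own restrictions on which queries they accept.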

This leads me to my current to-do list:

- Implement checks for daily data availability

- Materialize all views (and consolidate where feasible)

- Develop a DAG to orchestrate these processes intuitively and smoothly
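Before reaching for an orchestration tool, the to-do list can be sketched as a dependency graph using Python's standard-library `graphlib` (Python 3.9+). The task names are hypothetical placeholders for the loads, checks, and materialization steps described above.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "load_medium": set(),
    "load_convertkit": set(),
    "check_daily_data": {"load_medium", "load_convertkit"},
    "materialize_views": {"check_daily_data"},
    "refresh_dashboard": {"materialize_views"},
}


def run_order(dependencies: dict) -> list:
    """Return one valid execution order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dependencies).static_order())


for task in run_order(DEPENDENCIES):
    print(task)
```

The two loads can come out in either order, but the availability check always follows both of them, and the dashboard refresh always comes last. A dedicated orchestrator would add scheduling and retries on top of the same idea.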

Chapter 2: Insights for Aspiring Data Engineers

The first video, titled "Everyone's Data Infrastructure Is A Mess - The Truth About Working As A Data Engineer," delves into the common pitfalls and challenges faced by data engineers in today's landscape.

The second video, "Walking Through Data Infrastructure Migrations - With Real-Life Examples," provides practical insights and real-world examples of data infrastructure migrations.

As I conclude this exploration of my existing system, I want to share some valuable advice for those looking to solve problems and build intricate infrastructure at any level.

I cannot stress enough the importance of working through challenges on paper. A common mistake made by newer engineers, myself included, is diving into code and technology first. You can stare at a Python script for hours, yet you may not grasp it as well as you would by outlining your intended use case, the components of your build, and any related thoughts you may have.

For a deeper dive into my problem-solving method on paper, feel free to explore this resource.

If that approach isn’t yielding results, don’t hesitate to represent your information differently. I navigated my infrastructure needs through doodling, but you might find it more effective to use notecards or even discuss your ideas with a colleague. The key takeaway is that the medium doesn’t matter; what counts is the outcome.

When designing data systems, it’s crucial to focus on simplification. Specifically, aim to reduce:

- Run times, particularly for downstream applications

- Compute resources

- Costs

- Complexity

If you find yourself reaching a ceiling in optimization, I encourage you to read this article.

Although it may seem that I want to dismantle this process and start from scratch, the reality is that there are many excellent components already in place. The challenge lies in minimizing complexity and streamlining related processes.

In any problem-solving endeavor, I firmly believe that you cannot reach a solution without thoroughly understanding the obstacle you wish to overcome. This philosophy applies to my current exercise as well. While I could have created an architectural diagram or utilized a whiteboard, I opted for doodling because I appreciate a tactile, stream-of-consciousness approach.

By assessing my existing setup before attempting to orchestrate a flow, I can avoid a lot of confusion and frustration. In the near future, I plan to demonstrate how I will take this understanding and apply it to design and construct a DAG that optimizes my data ingestion.

But first, I need to locate another pen.
